Recent years have brought great progress in the field of computer vision, especially in deep learning based object detection. Existing methods typically assume that a large amount of labeled training data is available and that training and test data are drawn from the same distribution. In practice, however, these two assumptions do not always hold. To be more precise, they usually do not. How can we handle such situations and build robust detectors that adapt well to new environments? How can cross-domain object detection help solve real-world problems?

In our previous post, we described deep learning based object detection algorithms, which can operate in a one-stage or two-stage manner. These methods achieve the main goals of a detector: predicting objects’ locations and assigning the detected objects to the proper categories. In today’s post, we present another computer vision task related to object detection, one that tackles the domain shift problem: Cross Domain Object Detection (CDOD). CDOD emerged as a new branch of deep learning to meet the challenges listed above. This post describes state-of-the-art approaches to cross domain object detection.

Domain adaptation in object detection task

Imagine a situation where you train your model to detect common objects like buildings, cars, or pedestrians on the Cityscapes dataset [1], which contains a large number of annotated objects from German streets. Then, you test your model on a test subset of the dataset (daytime images), and everything seems to perform well. Next, you test the same detector on some night-time or foggy images (after all, a car detection system is supposed to work in a variety of driving scenarios), and you realize that your model can no longer properly detect pedestrians or cars (see Fig. 1).

The reason for the above problem is the change in the domain of the test images. The model was trained on the source distribution (daytime scenes) but tested on a different target distribution (night-time or foggy scenes). Here, domain adaptation (DA) comes to the rescue. DA is an important and challenging problem in computer vision. The primary goal of its unsupervised version is to learn the conditional distribution of images in the target domain given images in the source domain, without seeing any examples of corresponding image pairs. There are also adaptation methods that use paired pictures (the same shot in different scenarios) from a few domains [3], but they are not very popular for simple image-to-image translation.

Fig. 1. Adapting a pedestrian detector trained on labeled images from daytime conditions to unlabeled images from other conditions. Adapted from Ref. [2].

DA may occur in two ways: by direct transfer between one domain and the other, when two domains are similar to each other (one-step domain adaptation), or step by step, going from one domain to another through several intermediate domains, in the case of little similarity between the source and the target (multi-step domain adaptation) [4].

Following the success in image classification tasks brought by domain adaptation techniques, it was expected that DA would also improve the performance of object detection tasks. In this task, we have to take into consideration the amount of labeled data in the target domain (assuming we have a lot of labeled images from the source domain), and classify the type of cross domain object detection as:

- fully-supervised: all target data is annotated,

- semi-supervised: only part of the target training dataset is annotated,

- weakly-supervised: the target data has only coarse annotations, e.g. point-like ones,

- unsupervised: target data is not annotated.
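
The four settings above can be expressed as a small decision rule. The snippet below is only an illustrative sketch: the names `TargetSupervision` and `classify_setting`, and the `weak_annotations` flag, are our own assumptions and do not come from any library or from the cited papers.

```python
from enum import Enum


class TargetSupervision(Enum):
    FULLY_SUPERVISED = "fully-supervised"
    SEMI_SUPERVISED = "semi-supervised"
    WEAKLY_SUPERVISED = "weakly-supervised"
    UNSUPERVISED = "unsupervised"


def classify_setting(num_target_images, num_annotated, weak_annotations=False):
    """Map the state of the target-domain annotations to one of the four settings."""
    if not 0 <= num_annotated <= num_target_images:
        raise ValueError("num_annotated must be between 0 and num_target_images")
    if num_annotated == 0:
        return TargetSupervision.UNSUPERVISED
    if weak_annotations:
        # Annotations exist but are coarse (e.g. points instead of boxes)
        return TargetSupervision.WEAKLY_SUPERVISED
    if num_annotated == num_target_images:
        return TargetSupervision.FULLY_SUPERVISED
    return TargetSupervision.SEMI_SUPERVISED


setting = classify_setting(num_target_images=1000, num_annotated=50)
print(setting.value)
```

In all four cases we assume that the source domain is richly labeled; only the target side changes.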

Mechanisms to address domain shift

There are multiple approaches to performing domain adaptation for object detection. The following summary is mostly based on the review paper by Li et al. [5]. In their review, the authors distinguish between different types of domain adaptation, mainly depending on the techniques used to address domain shift: discrepancy-based, reconstruction-based, adversarial-based, hybrid, and others (uncategorized).

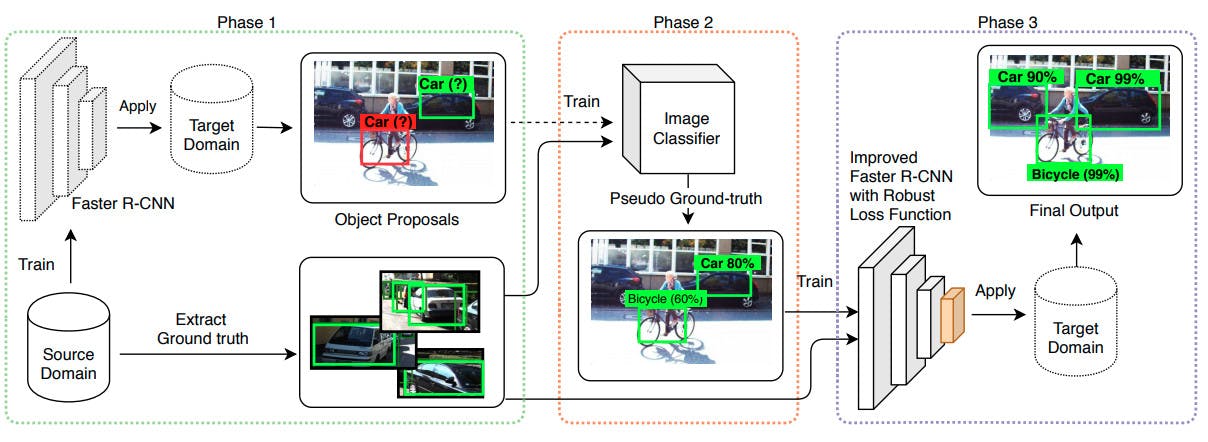

In discrepancy-based approaches, domain shift is decreased by fine-tuning the baseline detector network with target data. This data can be labeled or unlabeled. If the target domain lacks annotations, the first phase is to create pseudo-labels by applying a detection module trained on labeled data from the source domain. The annotations obtained this way can be refined or can go straight to the last stage, where the baseline detector is fine-tuned (retrained) using the original labeled data from the source domain and the pseudo-labels generated for the target domain. Fig. 2 shows an example of a discrepancy-based approach [6].

Fig. 2. Discrepancy-based approach proposed in Ref. [6].
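
To make the pseudo-labeling phase more concrete, here is a minimal sketch of how confident detections on unlabeled target images could be turned into training annotations. It is not the robust learning method of Ref. [6]; it only illustrates the simplest variant, in which detections above a confidence threshold are kept without refinement. The function names, the dictionary keys (`box`, `label`, `score`), and the threshold value of 0.8 are assumptions made for this example.

```python
def make_pseudo_labels(detections, score_threshold=0.8):
    """Keep confident detections on a target image as pseudo ground truth."""
    return [
        {"box": d["box"], "label": d["label"]}
        for d in detections
        if d["score"] >= score_threshold
    ]


def build_finetuning_set(source_annotations, target_detections, score_threshold=0.8):
    """Merge labeled source data with pseudo-labeled target data."""
    dataset = [
        {"image": image_id, "domain": "source", "objects": objects}
        for image_id, objects in source_annotations.items()
    ]
    for image_id, detections in target_detections.items():
        objects = make_pseudo_labels(detections, score_threshold)
        if objects:  # skip target images without any confident detection
            dataset.append({"image": image_id, "domain": "target", "objects": objects})
    return dataset


# Hypothetical inputs: one annotated daytime image and the detector's raw
# predictions on two unlabeled night-time images.
source_annotations = {"day_001.png": [{"box": (10, 20, 50, 80), "label": "car"}]}
target_detections = {
    "night_001.png": [
        {"box": (12, 22, 48, 79), "label": "car", "score": 0.93},
        {"box": (60, 30, 70, 55), "label": "pedestrian", "score": 0.41},
    ],
    "night_002.png": [{"box": (5, 5, 15, 25), "label": "pedestrian", "score": 0.30}],
}
finetuning_set = build_finetuning_set(source_annotations, target_detections)
```

The resulting list would then be passed to whatever training routine is used to fine-tune the baseline detector. A plain threshold like this lets wrong detections through as labels, which is why the refinement step mentioned above is often worth the effort.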

Reconstruction-based techniques, on the other hand, assume that making the source samples similar to the target samples, or vice versa, helps improve object detection performance. In such a case, the experiment can be divided into two main parts. First, we generate artificial samples by translating images from one domain (usually the source) to another (usually the target). For that purpose, a model based on Cycle Generative Adversarial Networks (CycleGANs) can be used. We described this model in more detail in our post about generating uncommon artificial samples of bacteria colonies grown in Petri dishes. In the second step, the created dataset is used to train the detector model. At this stage, source image annotations are transferred to the generated fake images either directly (as in the approach presented in Fig. 3) or after some refinement. Network performance can be enhanced by adding labeled photos from both domains [7].

Fig. 3. An overview of the reconstruction-based system proposed in Ref. [7].
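
The two-part procedure can be sketched in a few lines. In the example below, `translate_dataset` copies the source annotations directly onto the translated images, which corresponds to the direct transfer variant described above. The `darken` function is only a toy placeholder standing in for a trained day-to-night generator such as a CycleGAN; both names and the nested-list image representation are assumptions made for illustration.

```python
def translate_dataset(source_samples, translate_fn):
    """Build a fake target-domain training set from labeled source samples.

    source_samples: list of (image, boxes) pairs.
    translate_fn: stand-in for a trained source-to-target generator.
    """
    fake_target = []
    for image, boxes in source_samples:
        fake_image = translate_fn(image)
        # Translation changes appearance, not layout, so the boxes are reused unchanged
        fake_target.append((fake_image, list(boxes)))
    return fake_target


def darken(image, divisor=4):
    """Toy placeholder for a day-to-night generator: reduces pixel intensities."""
    return [[pixel // divisor for pixel in row] for row in image]


# A tiny 2x2 grayscale "daytime" image with one annotated box (x_min, y_min, x_max, y_max)
day_samples = [([[200, 100], [50, 250]], [(0, 0, 1, 1)])]
fake_night_samples = translate_dataset(day_samples, darken)
```

Reusing the boxes unchanged is only safe when the translation preserves object positions and shapes. If the generator distorts the scene, the refinement step mentioned above becomes necessary.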

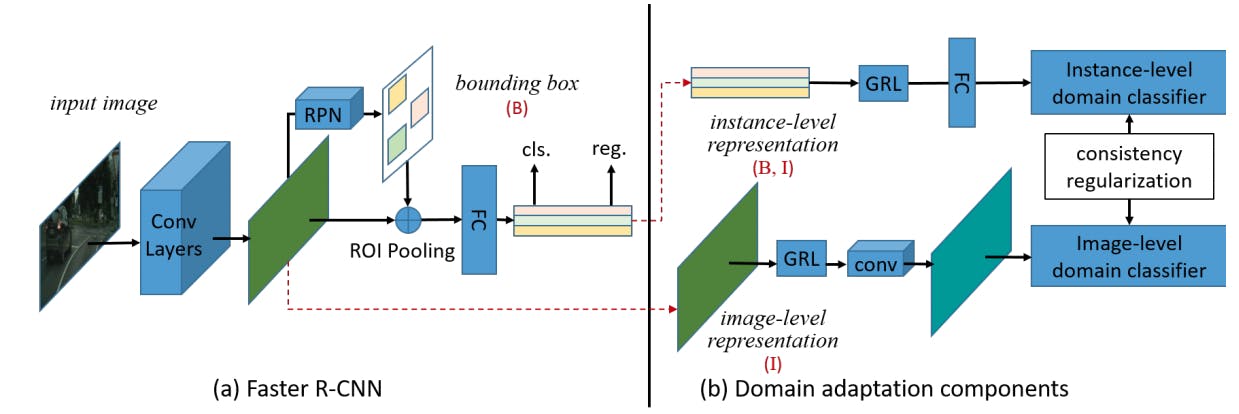

Adversarial-based methods use domain discriminators alongside the detector to recognize which domain a data point is drawn from. The network is trained adversarially to reduce the domain gap between the source and the target. The adaptation framework can be designed in many ways. For example, the authors of Ref. [8] assume that adversarial training should be conducted on extracted features, and therefore prepare two adaptation components: one on the image level (image scale, image style, illumination, etc.), and one on the instance level (object appearance in its background, object’s size, etc.). The proposed framework is presented in Fig. 4.

Fig. 4. Overview of the Domain Adaptive Faster R-CNN model from Ref. [8]. The network uses standard adversarial training together with a gradient reversal layer (GRL): the sign of the gradient is reversed when it passes through the layer during backpropagation.
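
The behavior of the gradient reversal layer is easy to show in isolation. The sketch below is a framework-free illustration with hand-written `forward` and `backward` methods, not the implementation from Ref. [8]; the class name and the `weight` scaling factor are assumptions. In a real deep learning framework, the same idea would be implemented as a custom operation with its own backward pass.

```python
class GradientReversal:
    """Identity in the forward pass; flips and scales gradients in the backward pass."""

    def __init__(self, weight=1.0):
        # Hypothetical factor controlling the strength of the adversarial signal
        self.weight = weight

    def forward(self, features):
        # Features reach the domain discriminator unchanged
        return list(features)

    def backward(self, grad_output):
        # Gradients flowing back to the feature extractor get the opposite sign
        return [-self.weight * g for g in grad_output]


grl = GradientReversal(weight=0.5)
features = [0.2, -1.0, 3.0]
passed_features = grl.forward(features)
reversed_grads = grl.backward([1.0, -2.0])
```

The domain discriminator is trained to minimize its classification loss. Because the gradient is reversed on its way back, the feature extractor is pushed in the opposite direction, towards features from which the discriminator cannot tell the source and target domains apart.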

Some authors [9] combine several of the above-mentioned mechanisms, which usually results in better performance. Mechanisms based on discrepancy and reconstruction are the most popular choice for building such hybrids. There are also several other methods that the authors of the survey [5] did not fit into any of the above categories; graph-induced prototype alignment [10] is one example.

Closing remarks

Standard neural networks trained on photos drawn from one domain fail in the case of detection performed on images with different illumination conditions or scenery. In order to remedy this, several methods allowing cross domain object detection have been proposed in recent years. To a large extent, they adapt photos from one domain to look like photos from another domain, and then perform object detection.

Domain adaptation in CDOD also concerns synthetic-to-real image translation. When only a few samples are available in the target domain, photos can be generated using classical approaches, such as pasting cut-out objects onto diverse photos from common datasets and then adapting them to look realistic. In the next step, the above-mentioned mechanisms for cross-domain detection enable the recognition of objects in the target domain, for which annotations could be expensive to collect.
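
The cut-and-paste step has a convenient side effect: the bounding box of each pasted object is known for free. The snippet below is a minimal sketch of that idea using nested lists as grayscale images. The function name `paste_object` and the box convention (x_min, y_min, x_max, y_max, with exclusive maxima) are assumptions, and the later step of making the composite look realistic is not covered here.

```python
def paste_object(background, patch, top, left):
    """Paste a rectangular object patch onto a copy of the background.

    Returns the composed image and the bounding box of the pasted object.
    """
    height, width = len(patch), len(patch[0])
    if top < 0 or left < 0 or top + height > len(background) or left + width > len(background[0]):
        raise ValueError("patch does not fit inside the background")
    composed = [row[:] for row in background]  # leave the original background intact
    for dy in range(height):
        composed[top + dy][left:left + width] = patch[dy]
    return composed, (left, top, left + width, top + height)


background = [[0] * 5 for _ in range(4)]  # 4 rows x 5 columns
patch = [[9, 9], [9, 9]]                  # 2x2 cut-out object
synthetic_image, box = paste_object(background, patch, top=1, left=2)
```

Such synthetic samples are labeled by construction, so they can serve as the annotated source domain for the adaptation mechanisms described earlier.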

On the other hand, a single input image may correspond to multiple possible outputs in the DA step, and here the problem of universal/multi-domain object detection appears. The proper design of universal detectors, which would eliminate this problem, is still an open issue.

We hope you enjoyed reading this post. If you are looking for more details on object detection solutions, feel free to reach out to us for more tailored recommendations.

Literature

[1] Cordts, Marius, et al. “The Cityscapes Dataset for Semantic Urban Scene Understanding.” IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

[2] RoyChowdhury, Aruni, et al. “Automatic adaptation of object detectors to new domains using self-training.” CVPR (2019)

[3] Zhu, Jun-Yan, et al. “Toward Multimodal Image-to-Image Translation.” arXiv preprint arXiv:1711.11586 (2017)

[4] Wang, Mei, et al. “Deep visual domain adaptation: A survey.” Neurocomputing, vol. 312, pp. 135-153 (2018)

[5] Li, Wanyi, et al. “Deep Domain Adaptive Object Detection: a survey.” arXiv preprint arXiv:2002.06797 (2020)

[6] Khodabandeh, Mehran, et al. “A Robust Learning Approach to Domain Adaptive Object Detection.” ICCV (2019)

[7] Arruda, Vinicius F., et al. “Cross-Domain Car Detection Using Unsupervised Image-to-Image Translation: From Day to Night.” IJCNN (2019)

[8] Chen, Yuhua, et al. “Domain Adaptive Faster R-CNN for Object Detection in the Wild.” CVPR (2018)

[9] Inoue, Naoto, et al. “Cross-Domain Weakly-Supervised Object Detection through Progressive Domain Adaptation.” CVPR (2018)

[10] Xu, Minghao, et al. “Cross-domain Detection via Graph-induced Prototype Alignment.” CVPR (2020)